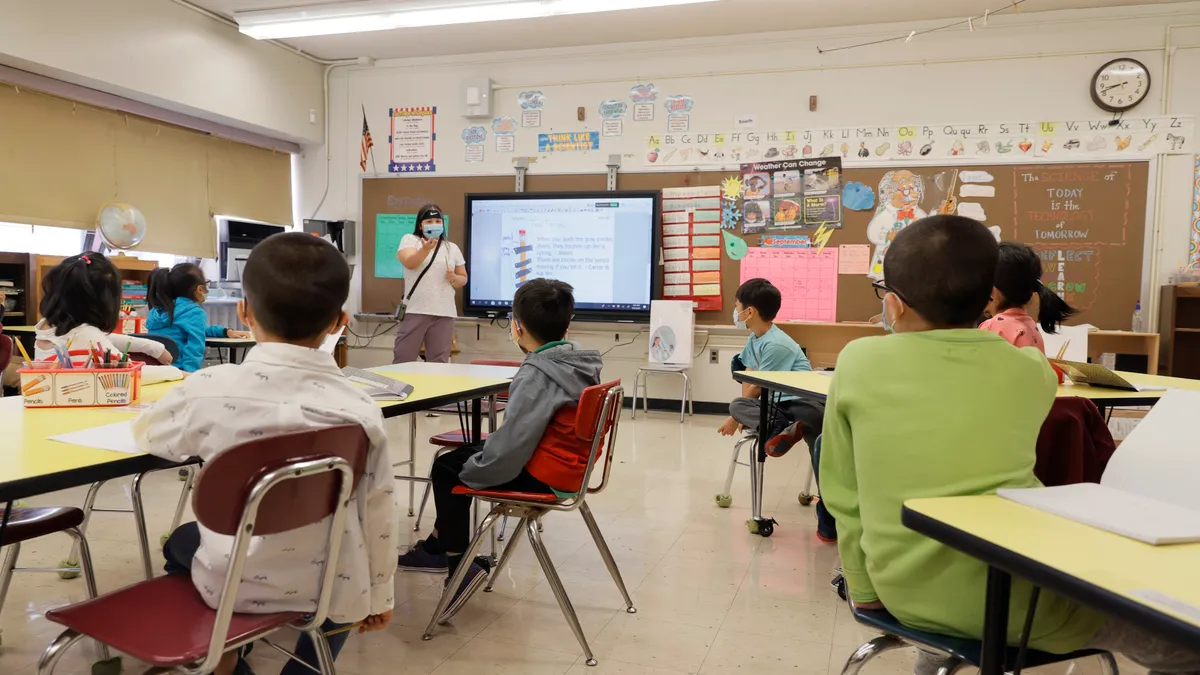

Spring has long been known as testing season in school districts across the country. But in recent years, some educators have started to feel as if testing season lasts from September to June.

“Kids are being tested all year, all the time,” says Bootsie Battle-Holt, a 7th and 8th grade math teacher at Marina Del Rey Middle School and Performing Arts Magnet in the Los Angeles Unified School District (LAUSD). “For the kids, it gets to the point that they are so saturated with assessments that they are not always doing their best.”

On top of the Smarter Balanced Assessment Consortium (SBAC) tests required as part of the California Department of Education’s academic accountability system, Battle-Holt says giving periodic SBAC assessments during the school year is also “highly encouraged” by the district. Her frustration, however, is that while those interim tests might serve as a good preview of the computer-based assessments for the students, the results aren’t really telling her anything about what students know.

The periodic tests, she says, “are good for students to see the kinds of test questions that they’ll get and for manipulating the technology, but in terms of data, we’re not getting what we need.” Battle-Holt’s experience reflects a testing issue that affects school districts across the country.

“The reality that a lot of people don’t want to admit is that the state has a relatively minor part in this over-testing thing,” says Scott Marion, the executive director of the Dover, N.H.-based National Center for the Improvement of Educational Assessment. “People need to critically evaluate every assessment they are giving outside of regular classroom practice and ask the hard questions. Why are we doing this? What purpose is it intended to serve? Is it honestly delivering on its purposes? And is it redundant with other information?”

Focus on putting valuable ‘minutes back into the classroom’

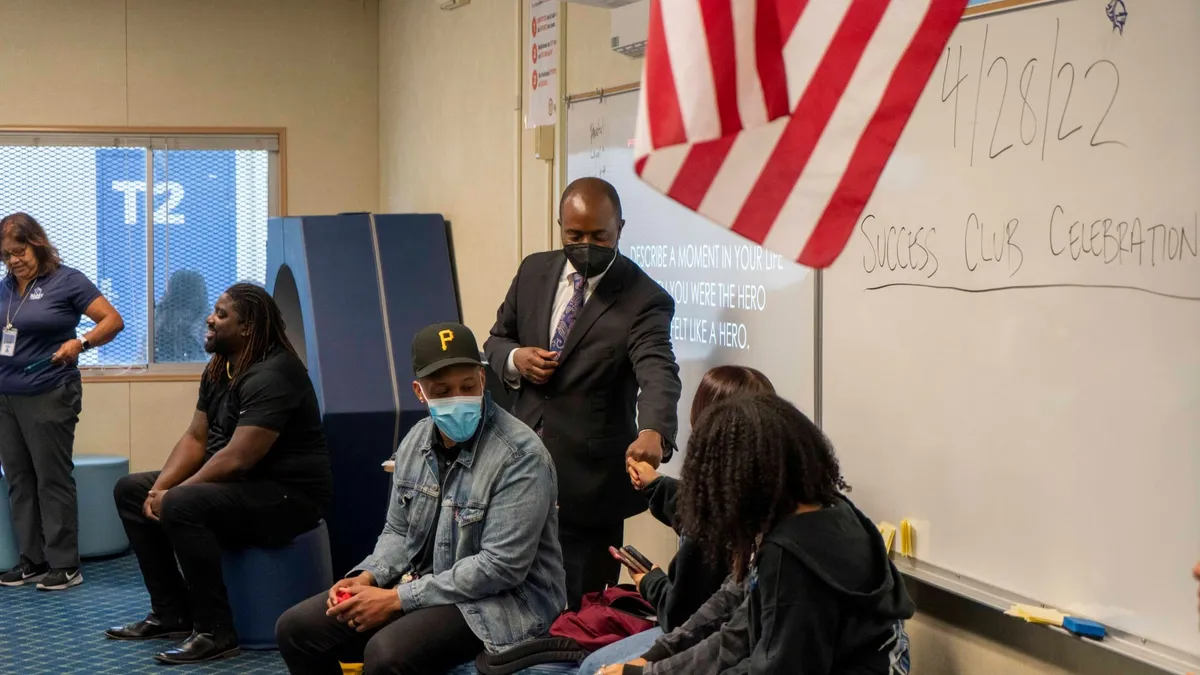

That’s what some large metropolitan school districts have been trying to do. Over the past three years, Miami-Dade County Public Schools has eliminated hundreds of tests that were assessing students in the same grade levels and in the same content areas covered by the state testing program. And the fact that none of the district’s schools received an F in the Florida Department of Education’s grading system this year is an indication that district officials moved in the right direction, says Gisela Field, the administrative director of the district’s Assessment, Research, and Data Analysis department.

“We put minutes back into the classroom,” Field says, adding that fewer tests not only led to more instructional time but also allowed “more time for students to be pulled out and to be provided with very specific instruction on areas where they were lacking.”

Some school systems began to cut back on locally mandated tests not long after the Council of the Great City Schools (CGCS) released a study showing that among 66 large urban school districts, students take an average of 112 tests between prekindergarten and 12th grade. That figure doesn’t include optional tests, diagnostic tests for English learners and students with disabilities, or assessments designed by teachers.

“I don’t know what districts responded to our comprehensive study by cutting back on testing, but I’m sure the study probably played a part,” says Henry Duvall, CGCS’ director of communications.

Moreover, time spent on assessment includes not only the hours students spend actually taking tests, but also the time teachers spend on preparation. About a year before the CGCS report, Teach Plus, a nonprofit organization that supports teachers’ involvement in policy and leadership, released a study in which teachers at the elementary level estimated the time spent on preparing for tests to be twice the time allotted in school district calendars. The report, “The Student and the Stopwatch,” included data from 32 districts and surveys of over 300 teachers in six districts. It showed that urban districts spend more time on testing than suburban districts.

“Instead of possibly doing projects or more hands-on learning, we really focused on the testing format and preparing our students to be comfortable taking the test,” said one teacher quoted in the report. “The prepping starts at the beginning of the year and ends in April.”

In Miami-Dade, Field says that while no one has called her office to say thank you, she has definitely noticed fewer complaints about the amount of time teachers are devoting to testing and test preparation.

State vs. local takes on testing balances

Some district leaders, however, still think states bear some of the responsibility for limiting standardized testing. Keith Kline, the superintendent of the West Clermont Local School District, east of Cincinnati, is one example of an administrator who has more confidence in locally administered assessments than the ones required by the Ohio Department of Education (ODE).

“While I appreciate the recent attention and move to reduce the amount of time spent testing, this reduction needs to focus on the high-stakes state tests and not the diagnostic assessments that will actually improve daily instruction in classrooms,” he wrote in a letter on the district’s website, adding that he was urging parents to contact state legislators if they were frustrated about how many tests their children take.

He also said that the “diagnostic assessments” used by the district are “incredibly valuable to our teachers as they plan their instruction and work with their students both in large and small group settings.”

As with most states currently crafting their accountability plans for the federal Every Student Succeeds Act, the number of tests that will be required is a central focus of that work in Ohio. State Superintendent Paolo DeMaria is recommending that the state eliminate three exams that are not required by the federal government. A special committee focusing on assessment, however, wanted to see even more tests taken off the calendar.

“We really have some broader, deeper questions of what should assessment look like in the future,” said John Richard, ODE’s deputy superintendent. He added that some of those same committee members will now participate in a strategic planning work group that will continue to work on assessment issues. The department, he said, hopes to conduct assessment audits to look at where state and local tests might be redundant or where tests might serve multiple purposes. He added that eliminating specific state assessments could have a ripple effect at the district level, with districts no longer administering benchmark or interim tests related to those state tests.

Districts’ preference for interim assessments, such as the Measures of Academic Progress from the Northwest Evaluation Association (NWEA), over state-mandated tests is also an issue in Minnesota. This past spring, the state’s Office of the Legislative Auditor issued a report finding that “administering state-required standardized tests strains the resources of many school districts and charter schools,” and that many teachers and principals don’t feel that they have the expertise to interpret the results of the Minnesota Comprehensive Assessments (MCA) anyway.

Two years ago, the state put a cap on the number of hours schools can test students, but the MCA tests were exempted from that restriction, so districts instead had to drop tests that they felt were more useful. “It gets back to we only have so many hours with our students and what’s the best use of those hours?” asks Gary Amoroso, the executive director of the Minnesota Association of School Administrators.

Some schools even add an additional layer of tests beyond what districts require, notes Paul Changas, the executive director for research, assessment and evaluation for Metropolitan Nashville Public Schools (MNPS). His district is also trying to “get a handle” on the amount of testing being conducted by surveying teachers and principals and forming focus groups to discuss the purposes of each test.

Marion adds that just because districts or schools say they intend to use additional assessments to plan instruction during the year doesn’t mean that they do. And even if they don’t use interim or predictive tests, he says there are more reliable ways of determining if students are meeting standards during the year. “One of the most powerful things,” he says, is implementing formative assessment strategies, such as “high-quality performance tasks” and giving teachers an opportunity to work together to examine students’ work. He adds, however, that it requires time, expertise and hard work.

Changas adds that performance tasks are difficult to score on a “large-scale basis,” but formative assessment tends to be viewed by teachers as more connected to their instruction. In fact, one finding in the Teach Plus survey was that when tests were “properly used in conjunction with the curriculum,” teachers saw the amount of time spent on those assessments as less of a factor.

Battle-Holt, for example, uses “task-based” math lessons from organizations such as LearnZillion, the Mathematics Assessment Resource Service and Illustrative Mathematics. Not only is she “getting that data as the kids are working,” but she says she feels these types of assessments better prepare students for the performance task portion of the state test.

Changas says MNPS hopes to strengthen teachers’ skills in using formative assessment. This fall, the district will make some performance tasks available for grades 3-11 in English language arts and math that he hopes will not only help students prepare for state exams, but will also be useful for teachers in better understanding their students’ strengths and weaknesses. The district won’t collect the results, but the tasks, he says, could be a “great professional development piece” for teachers.

“I hope we find a little balance,” he says. “The formative assessment practices are where we have the opportunity to really raise student achievement.”