Dive Brief:

-

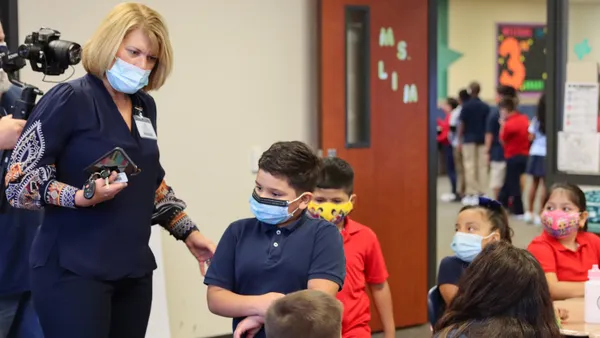

Lockport City School District in upstate New York paused plans to test recently installed facial recognition software as the state looks into whether necessary data protection and student privacy safeguards are in place, EdScoop reports.

- The technology was purchased with $3.8 million from a Smart Schools Bond Act state grant, alongside other measures such as bullet-proof greeter windows, panic buttons and a visitor badging system. Although the facial recognition software is already installed on the security cameras, the district is waiting for state approval before using that part of the system.

- While the district's website states that the New York State Education Department approved the purchase in November 2017, the agency says it is still in the process of finishing the privacy and security regulations it would require the district to adopt.

Dive Insight:

The ongoing threat of school violence has left districts scrambling to protect students and staff. Facial recognition software seems like a good answer, detecting the faces of people who are considered a threat and shouldn’t be on school grounds, such as expelled students. The technology can also detect guns, immediately alerting police and triggering a lockdown when one is found.

Lockport City School District defends its decision to implement facial recognition software on the grounds that every second is critical when students are under attack. But many feel such software goes too far by collecting data on students who haven’t done anything wrong. Privacy and security advocates also raise concerns that stolen facial recognition data could put students’ identities at risk, especially with school districts now among hackers’ most popular targets.

While facial recognition is hitting the education market in a big way, it is still mostly unregulated, and some companies are using the data they collect to target consumers.

The American Civil Liberties Union says schools should think twice about installing facial recognition software because it is prone to sabotage and threatens privacy. The ACLU also cites false matches, discrimination and the consequences of the school-to-prison pipeline that is fed when students are funneled into the justice system. Beyond those concerns, the software can contribute to a hardened environment that feels more akin to a prison than a warm, welcoming place to learn.

Unfortunately, facial recognition software alone can ultimately create a false sense of security. The technology is best used to prevent issues with known threats, such as a parent with a restraining order or a known sex offender. It would not, however, prevent incidents like the recent shooting at the Virginia Beach municipal building where 12 people were killed. In that instance, the shooter was an employee who had resigned via email that morning, and his badge had not been deactivated because there was no reason to consider him a threat.